Trials and Tribulations of AWS EventBridge

EventBridge is the AWS service that allows you to tap into the stream of events taking place within your AWS account. It is the most powerful service within AWS for monitoring your cloud environment but is generally an afterthought compared to CloudTrail and CloudWatch. It was once a part of the CloudWatch suite of features but was broken off as a standalone service and expanded to form its own path as a multi-purpose event streaming service.

Unlike CloudTrail and CloudWatch Logs, EventBridge events are not captured by default and there isn’t an easy way to get a glimpse at the stream of events as they pass. If you fail to capture the events you desire, they will be gone for good.

Things have improved since the CloudWatch days. You can now create an archive of events and play them back for event rule development. They have also made many improvements in event rule functionality. But there are still some gotchas that will trip you up. Here are some things that have tripped me up.

Silent Failures

If there is a problem with an event rule, the event rule will fail its invocation. This is a problem as there isn’t any logging to tell you what the issue is. The only evidence of these invocation failures is a metric recorded in CloudWatch and displayed under the event rule’s monitoring tab within the EventBridge interface. To be aware of these rule failures, you would have to set up a CloudWatch alarm. As for diagnosing the issue, if the problem is with the target, you will have to search through Lambda logs or CloudTrail for errors and hope you find something. If the issue is with the configuration of the rule and its pattern matching, you may end up with nothing to work with.

It is a good practice to create a CloudWatch metrics alarm to check for EventBridge invocation failures. This will give you some visibility of any event rule failures. For better visibility, you could set up a dead-letter queue on your event rules and have a Lambda function create log entries from that SQS queue in a CloudWatch Logs log group.
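As a rough sketch of the alarm described above, the following Python builds the arguments for CloudWatch's PutMetricAlarm call against the `FailedInvocations` metric in the `AWS/Events` namespace. The alarm name format, the five-minute period, and the SNS topic ARN are assumptions chosen for illustration, and the boto3 call is kept in a separate function so the parameters can be inspected without AWS credentials.

```python
def failed_invocations_alarm(rule_name, sns_topic_arn):
    """Build keyword arguments for an alarm on a rule's failed invocations."""
    return {
        # Hypothetical naming convention; use whatever fits your account.
        "AlarmName": f"eventbridge-failed-invocations-{rule_name}",
        "Namespace": "AWS/Events",
        "MetricName": "FailedInvocations",
        "Dimensions": [{"Name": "RuleName", "Value": rule_name}],
        "Statistic": "Sum",
        "Period": 300,  # assumed 5-minute evaluation window
        "EvaluationPeriods": 1,
        "Threshold": 0.0,
        "ComparisonOperator": "GreaterThanThreshold",
        # No datapoints means no failures were recorded, so stay in OK.
        "TreatMissingData": "notBreaching",
        "AlarmActions": [sns_topic_arn],
    }


def create_alarm(params):
    """Send the alarm definition to CloudWatch (requires boto3 and credentials)."""
    import boto3  # imported lazily so the builder above works without it

    return boto3.client("cloudwatch").put_metric_alarm(**params)
```

Calling `create_alarm(failed_invocations_alarm("my-rule", topic_arn))` once per rule would be one way to wire this up; a single alarm without the `RuleName` dimension may also be worth considering if you have many rules.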

Event Patterns

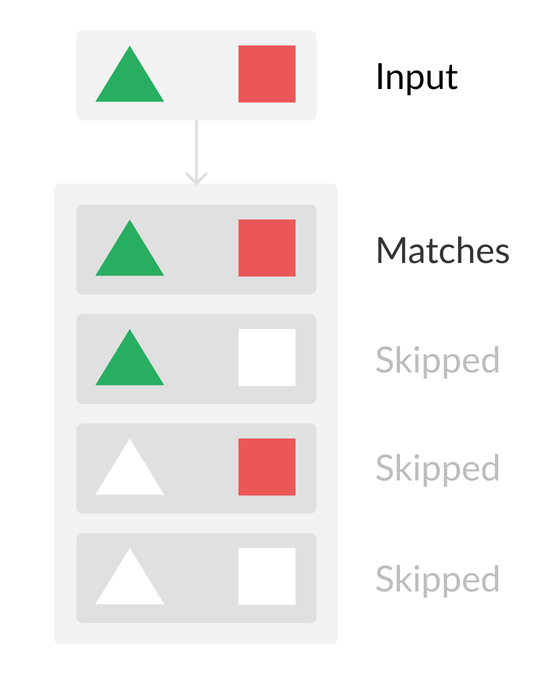

Pattern Content Filters

The recent addition of content filters adds some much-needed flexibility to the pattern matching. I would like to highlight a feature called multiple matching. AWS’ documentation does touch on multiple matching, but only in relation to having a single content filter on multiple keys (properties). It is not possible to match multiple content filters on the same key, such as matching a prefix and a suffix. Attempting to use the same key more than once will cause only the last one to be evaluated. They now have a note mentioning this behavior. For the moment, there is no way to perform an all-of match against a single key. The reason for this might be down to how content filters are not delineated from regular key names and could cause conflicts. I suspect the EventBridge team have backed themselves into a corner with this design.

NOTE: The following examples do not work

This will return the error ‘Event pattern is not valid. Reason: “prefix” must be an object or an array’.

    "object": {
        "key": {
            "suffix": ".csv",
            "prefix": "folder/"
        }
    }

This will treat prefix and suffix as keys and always fail to match.

    "object": {
        "key": {
            "suffix": [".csv"],
            "prefix": ["folder/"]
        }
    }

It is also not possible to pass a list of values to a content filter.

    "object": {
        "key": [
            {
                "suffix": [".csv", ".json"]
            }
        ]
    }

The recent addition of the “$or” functionality uses a “$” character to delineate it from key names. This deviates greatly from all other event pattern functionality and shows a re-think on how advanced functionality is defined. We will likely see future content filters and other features being prefixed with ‘$’. There is no way to fix the current content filters without breaking backwards compatibility. They will need to release a version 2 for event pattern syntax.

The good news is that it is possible to perform an any-of match on a list of content filters for a single key. This doesn’t help us with the use case above, but does allow for some other ones that are beneficial.

The following will match on either the suffix or the prefix. It cannot be used to require both:

    "object": {
        "key": [
            {
                "suffix": ".csv"
            },
            {
                "prefix": "folder/"
            }
        ]
    }

You can duplicate content filters, unlike keys. This now allows us to match both suffixes:

    "object": {
        "key": [
            {
                "suffix": ".csv"
            },
            {
                "suffix": ".json"
            }
        ]
    }

The AWS documentation never gives an example of using more than a single content filter object within a key’s value list. You can actually specify multiple content filter objects and even mix them with plain text values. As long as one of the content filters or text values matches, the event will match the pattern.

This undocumented ability of multiple-matching is inherited from the base functionality where providing a list of text values within [] brackets will default to match any-of instead of all-of. If the event property matches one of the items in the key’s value list, the event will match the pattern. The fact that content filters are treated the same as text values when it comes to potential values is a hidden bonus.
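To make the any-of behavior concrete, here is a small local model in Python. It is only a sketch of the semantics described above, not EventBridge's actual matching engine, and it handles just plain text values plus the `prefix` and `suffix` content filters. The function name `matches_any` and the sample file names are made up for illustration.

```python
def matches_any(value, candidates):
    """Return True if `value` satisfies at least one entry in `candidates`.

    Each candidate is either a plain value (exact match) or a dict holding a
    single content filter. Only "prefix" and "suffix" are modelled here.
    """
    for candidate in candidates:
        if isinstance(candidate, dict):
            if not isinstance(value, str):
                continue  # prefix/suffix only make sense for strings
            if "prefix" in candidate and value.startswith(candidate["prefix"]):
                return True
            if "suffix" in candidate and value.endswith(candidate["suffix"]):
                return True
        elif value == candidate:
            return True
    return False


# A value list mixing a plain text value with several content filters.
KEY_FILTERS = [
    "report.txt",
    {"suffix": ".csv"},
    {"suffix": ".json"},
    {"prefix": "folder/"},
]
```

With this list, `matches_any("data.csv", KEY_FILTERS)` and `matches_any("folder/image.png", KEY_FILTERS)` both return `True`, while `matches_any("image.png", KEY_FILTERS)` returns `False` because no single entry is satisfied.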

This discovery of using multiple content-based filters on a single key fixed an edge case issue that was causing me to give up on complex pattern matching within the event rules and re-create my own pattern matching engine in a Lambda function. The following example is how you can match a path that may not always exist.

Let’s say you have a specific “deployment-role” IAM role whose events you want to ignore. Well, you would use an “anything-but” content filter to exclude it. What happens if you have an AWS account with IAM users that don’t assume roles? Well, the events caused by an IAM user will not contain the same “sessionIssuer” section as an event caused by someone assuming an IAM role. If the path does not exist, “anything-but” will fail to match events caused by the IAM user. A value must be present at the specified path for that content filter to match. Strangely, when one of those events occurs, the event rule will not just skip the event, but will actually fail the invocation.

To solve this problem, you can use multiple matching and add an {“exists”: false} content filter into the same list as the “anything-but” content filter. As long as one of those content filters is true, the event will match the pattern.

    {
        "detail-type": ["AWS API Call via CloudTrail"],
        "source": ["aws.ec2"],
        "detail": {
            "eventName": [
                "CreateSecurityGroup",
                "DeleteSecurityGroup",
                "AuthorizeSecurityGroupIngress",
                "AuthorizeSecurityGroupEgress",
                "RevokeSecurityGroupIngress",
                "RevokeSecurityGroupEgress"
            ],
            "eventSource": ["ec2.amazonaws.com"],
            "userIdentity": {
                "sessionContext": {
                    "sessionIssuer": {
                        "userName": [
                            {
                                "anything-but": ["deployment-role"]
                            },
                            {
                                "exists": false
                            }
                        ]
                    }
                }
            }
        }
    }
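The way “anything-but” and “exists” combine can be reasoned through with a simplified local model. The Python below is a sketch under stated assumptions: it only models nested keys, exact values, `anything-but`, and `exists`, it is not how EventBridge is implemented, and the trimmed-down pattern and sample events are invented for the example.

```python
_MISSING = object()  # sentinel for "no value at this path"


def leaf_matches(value, candidates):
    """Any-of match of one event value against a pattern's value list."""
    for candidate in candidates:
        if isinstance(candidate, dict):
            if "exists" in candidate:
                if candidate["exists"] == (value is not _MISSING):
                    return True
            elif "anything-but" in candidate:
                excluded = candidate["anything-but"]
                if not isinstance(excluded, list):
                    excluded = [excluded]
                # A value must be present for anything-but to match.
                if value is not _MISSING and value not in excluded:
                    return True
        elif value is not _MISSING and value == candidate:
            return True
    return False


def pattern_matches(pattern, event):
    """Every key in the pattern must match (all-of across keys)."""
    for key, expected in pattern.items():
        actual = event.get(key, _MISSING) if isinstance(event, dict) else _MISSING
        if isinstance(expected, dict):
            if not pattern_matches(expected, {} if actual is _MISSING else actual):
                return False
        elif not leaf_matches(actual, expected):
            return False
    return True


# Trimmed version of the pattern above, keeping only the relevant keys.
PATTERN = {
    "detail": {
        "eventName": ["CreateSecurityGroup"],
        "userIdentity": {"sessionContext": {"sessionIssuer": {"userName": [
            {"anything-but": ["deployment-role"]},
            {"exists": False},
        ]}}},
    }
}


def sample_event(user_identity):
    """Build a minimal, made-up event around a userIdentity section."""
    return {"detail": {"eventName": "CreateSecurityGroup",
                       "userIdentity": user_identity}}


admin_role = sample_event({"sessionContext": {"sessionIssuer": {"userName": "admin-role"}}})
deploy_role = sample_event({"sessionContext": {"sessionIssuer": {"userName": "deployment-role"}}})
iam_user = sample_event({"type": "IAMUser", "userName": "alice"})
```

In this model the `admin_role` and `iam_user` events match while `deploy_role` does not, which is the outcome the pattern is after. Removing the `{"exists": False}` entry makes the `iam_user` event stop matching.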

When an IAM user causes an event, the userName property is found under “sessionContext”. The “sessionContext/sessionIssuer” section is empty. When an IAM role causes an event, the userName property is found in “sessionContext/sessionIssuer”. This highlights how not just CloudTrail events, but many other AWS events, do not adhere to a strict structure. Properties will move around and identifiers will change. Another example of this is where an iam:CreatePolicy event will return a policyName attribute while an iam:DeletePolicy and all other policy events will return a policyArn attribute instead. You might think the trick above could solve this issue, but it can’t. You could potentially match events where neither userName path exists. This brings us to another limitation with event patterns: there isn’t support for conditionals or string manipulation to solve these complex but common situations. If you want to consistently extract certain details without running into invocation failures, you will need to send the event to a Lambda function for pre-processing and transformation.

Events With Lists

Occasionally you will run into an event with a list of objects. This is actually a common occurrence with CloudTrail as it will return a list of affected resources within the responseElements path. Let’s say you want to match if a specific resource has been affected. You would expect to be able to match on an attribute of an object within that resource list. Well, it is not possible to do that. You are not able to drill into a list of objects to match a specific object. With event rule patterns, once you run into a list, you can’t go any further.
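If the check has to move into a Lambda function, drilling into the list is straightforward. The sketch below is one possible approach in Python. The default path (`detail.responseElements.instancesSet.items`) and the `instanceId` key are assumptions modelled on an EC2 RunInstances event, and the helper name is hypothetical, so adjust both to the event you are actually handling.

```python
def affects_resource(event, resource_id,
                     list_path=("detail", "responseElements", "instancesSet", "items"),
                     id_key="instanceId"):
    """Return True if any object in the list at `list_path` has the given ID."""
    node = event
    for key in list_path:
        if not isinstance(node, dict):
            return False  # the path ends early for this event shape
        node = node.get(key)
    if not isinstance(node, list):
        return False
    return any(isinstance(item, dict) and item.get(id_key) == resource_id
               for item in node)


# Made-up event fragment for illustration only.
example_event = {
    "detail": {
        "responseElements": {
            "instancesSet": {
                "items": [
                    {"instanceId": "i-0aaa1111bbbb2222c"},
                    {"instanceId": "i-0ddd3333eeee4444f"},
                ]
            }
        }
    }
}
```

The function returns `False` instead of raising when the path is missing, so the same handler can receive events that do not carry the list at all.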

Input Transformer

Illegal paths

Like I mentioned above, events do not follow a strict structure. Some events will contain properties that others will not. Sometimes an AWS service will return different identifiers depending on the event. An example of this is when one event returns an ARN while the other returns a resource name.

These differences between AWS event structures are little nightmares when working with the Input Transformer. Input Transformer requires that every path specified in the input paths JSON exists. If any of them do not, your event rule invocation will fail, silently. This is where a coalesce style function or conditionals would be useful. As with event patterns, adding functions to JSON-based templates is difficult, but not impossible. Hopefully there are solutions in the future for allowing missing paths or pulling a value from the first available path (coalesce).

To get around this, you would have to create highly specific event rules that match each type of event you would want to react to, pass whole sections of the event through, or pass the unaltered event to something else to process. Doing this may cause you to sometimes run into the dynamic data limit mentioned below. This only leaves you with the option of sending the full event to a Lambda function for pre-processing and transformation.
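Inside that Lambda function, the coalesce behavior is only a few lines. The following Python is a minimal sketch: the dotted-path notation and the helper names `get_path` and `coalesce` are my own conventions, and the sample events assume the policy identifiers sit under `detail.requestParameters`, which may not hold for every event.

```python
def get_path(event, path):
    """Follow a dotted path through nested dicts; return None if any step is missing."""
    node = event
    for key in path.split("."):
        if not isinstance(node, dict) or key not in node:
            return None
        node = node[key]
    return node


def coalesce(event, *paths, default=None):
    """Return the value at the first path that exists and is not None."""
    for path in paths:
        value = get_path(event, path)
        if value is not None:
            return value
    return default


# Made-up event fragments: one carries a name, the other an ARN.
create_event = {"detail": {"eventName": "CreatePolicy",
                           "requestParameters": {"policyName": "audit-policy"}}}
delete_event = {"detail": {"eventName": "DeletePolicy",
                           "requestParameters": {
                               "policyArn": "arn:aws:iam::123456789012:policy/audit-policy"}}}

POLICY_PATHS = ("detail.requestParameters.policyArn",
                "detail.requestParameters.policyName")
```

`coalesce(create_event, *POLICY_PATHS)` falls through to the policy name, while `coalesce(delete_event, *POLICY_PATHS)` returns the ARN, and neither call fails when a path is absent.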

Dynamic Data

Some data will cause the input transformer to break. An example is an embedded policy document which happens on IAM policy change events. When modifying an IAM policy, a copy of the document is included within the requestParameters and/or responseElements sections. If you try to pass in the full event or section containing the document as a property, it will cause the invocation to fail. The AWS documentation lists a value length limit of 256 characters, but that doesn’t appear to be the same as the computed value length limit, as I have far exceeded that without issue. There is either a special character or a content length issue. I am not sure as I have no error message to work with, only theories. As you are not able to manipulate the JSON object within the input paths, you will be forced to give up on the input transformer and send the unaltered matched event to a Lambda function for pre-processing and transformation. Are you seeing a pattern here?

Matched Event

If you do away with the Input Transformer and go back to using matched events, your Lambda function target will have to rely on the contents of the original event to identify and direct it to its final destination. With a matched event, you will not have the luxury of a rule name, additional attributes, or tags to help you. This breaks the practice of using a generic Lambda function for processing events from multiple event rules and sources. You may need to create separate Lambda functions to handle events from every AWS service you work with and possibly every destination.

Let’s say you want CodePipeline events to go to the developers, EC2 and ECS events to go to the cloud infrastructure team, or VPC changes to the security team. You would need to have separate Lambda processing functions for each event rule or build a single complex Lambda function that uses a lookup table (DynamoDB) to help identify the event, transform it, determine which team the event message should be sent to, and how the message should look. If you get to this point, you have effectively recreated EventBridge’s event rules with the functionality you needed.
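A bare-bones version of that lookup could look like the Python below. It is only a sketch: an in-memory dictionary stands in for the DynamoDB table, the team names and the list of VPC-related event names are invented for the example, and a real function would also need the transformation and message formatting steps.

```python
# Hypothetical stand-in for a DynamoDB lookup table keyed by event source.
TEAM_BY_SOURCE = {
    "aws.codepipeline": "developers",
    "aws.ec2": "cloud-infrastructure",
    "aws.ecs": "cloud-infrastructure",
}

# VPC API calls arrive with the same source as EC2, so they are
# identified by event name instead. This set is illustrative, not complete.
SECURITY_EVENT_NAMES = {
    "CreateVpc",
    "DeleteVpc",
    "CreateSecurityGroup",
    "DeleteSecurityGroup",
}


def route(event):
    """Return the team that should receive this event."""
    detail = event.get("detail")
    event_name = detail.get("eventName") if isinstance(detail, dict) else None
    if event_name in SECURITY_EVENT_NAMES:
        return "security"
    return TEAM_BY_SOURCE.get(event.get("source"), "unrouted")
```

Checking the event name before the source matters here, because a VPC change would otherwise be routed to the infrastructure team along with every other `aws.ec2` event.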

Eventual Consistency

This isn’t as much of an issue as it is a fact of life. Your event rule may not fire when the event actually happens. Sometimes AWS has issues where the event bus stream is delayed. In those cases, the events will eventually get posted to the bus and your event rule could trigger many hours after when it should have. There is also a chance an event could be duplicated, or your rule gets triggered twice. You may need to think of these edge cases when planning your event rules. If you have a rule starting an EC2 instance or ECS task on a matching event, you could end up with a bit of a mess.
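One common way to guard against duplicates is to make the target idempotent by remembering which event IDs have already been handled. The Python below sketches the idea, assuming the duplicate delivery carries the same top-level `id` as the original. The in-memory set only survives within a single execution environment, so in practice it would need to be replaced by a durable store such as a DynamoDB table with a conditional write. The class and function names are hypothetical.

```python
class SeenEvents:
    """In-memory stand-in for a durable store of processed event IDs."""

    def __init__(self):
        self._ids = set()

    def mark(self, event_id):
        """Record the ID and return True only the first time it is seen."""
        if event_id in self._ids:
            return False
        self._ids.add(event_id)
        return True


def handle_once(event, seen, action):
    """Run `action(event)` unless this event ID was already processed."""
    if not seen.mark(event["id"]):
        return False  # duplicate delivery, skip the side effect
    action(event)
    return True
```

With this in place, a second delivery of the same event returns `False` and the instance or task is not started twice. It does nothing about late events, which still need a check against the event's `time` field if staleness matters.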

In addition to event triggered rules, classic schedule event rules are also affected by EventBridge’s eventual consistency, and your rules may not trigger at the time you expected.

They have recently added new functionality under the rule trigger configuration to specify a shorter retry window and the maximum number of retry attempts. They also have added support for a dead-letter queue for collecting failed invocations.
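For reference, both settings live on the target definition passed to the PutTargets API. The Python below is a sketch of building such a target. The default of two retries and a one-hour maximum event age are arbitrary values picked for the example, and the ARNs are placeholders.

```python
def build_target(target_id, target_arn, dlq_arn,
                 max_retries=2, max_age_seconds=3600):
    """Build one PutTargets entry with a retry policy and dead-letter queue."""
    return {
        "Id": target_id,
        "Arn": target_arn,
        "RetryPolicy": {
            "MaximumRetryAttempts": max_retries,
            "MaximumEventAgeInSeconds": max_age_seconds,
        },
        # SQS queue that receives events EventBridge could not deliver.
        "DeadLetterConfig": {"Arn": dlq_arn},
    }


def attach_target(rule_name, target):
    """Attach the target to a rule (requires boto3 and credentials)."""
    import boto3  # imported lazily so build_target works without it

    return boto3.client("events").put_targets(Rule=rule_name, Targets=[target])
```

The queue also needs a resource policy that allows EventBridge to send messages to it, which is not shown here.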

Scheduler

AWS has built a new EventBridge Scheduler to replace the scheduled event rules and centralize scheduling of events. It is a completely separate service under the hood with its own API interface and quotas, but officially it is still housed under the EventBridge umbrella. It has the same new features mentioned above that help deal with eventual consistency. In addition, there is also support for a flexible start window, time-zones, and grouping.
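To give a feel for how those options fit together, here is a sketch in Python of the arguments for the Scheduler CreateSchedule call. The cron expression, time zone, 15-minute window, retry values, and payload are all example values, and the ARNs passed in are placeholders.

```python
import json


def build_schedule(name, target_arn, role_arn, dlq_arn):
    """Build keyword arguments for EventBridge Scheduler's CreateSchedule."""
    return {
        "Name": name,
        "GroupName": "default",
        # 09:00 every day, evaluated in the time zone below.
        "ScheduleExpression": "cron(0 9 * * ? *)",
        "ScheduleExpressionTimezone": "America/New_York",
        # Allow the invocation to start any time within 15 minutes.
        "FlexibleTimeWindow": {"Mode": "FLEXIBLE", "MaximumWindowInMinutes": 15},
        "Target": {
            "Arn": target_arn,
            "RoleArn": role_arn,  # role Scheduler assumes to invoke the target
            "Input": json.dumps({"job": "daily-report"}),  # example payload
            "RetryPolicy": {
                "MaximumRetryAttempts": 3,
                "MaximumEventAgeInSeconds": 3600,
            },
            "DeadLetterConfig": {"Arn": dlq_arn},
        },
    }


def create_schedule(params):
    """Create the schedule (requires boto3 and credentials)."""
    import boto3  # imported lazily so build_schedule works without it

    return boto3.client("scheduler").create_schedule(**params)
```

Setting `"Mode": "OFF"` and dropping `MaximumWindowInMinutes` would disable the flexible window if the schedule needs to fire as close to the stated time as possible.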

Troubleshooting

Previously, troubleshooting event rule issues was nearly impossible as there wasn’t any event history to work with, unlike CloudTrail. If you wanted to get a snapshot of the events you were trying to match, you had to make an event rule with a generic pattern and target a Lambda function to dump the event details to a CloudWatch log group. The new archive/replay functionality of EventBridge doesn’t provide any visibility, but it does allow you to replay those captured events from within EventBridge. Having a list of captured events is useful for testing event rules, but it doesn’t make troubleshooting effortless as not all causes of a failed event rule invocation will produce an error event in CloudTrail. The recent addition of the dead-letter queue may help a bit more with troubleshooting. There still isn’t a single pane of glass to help you with troubleshooting event rule issues. You will need to build your own monitoring and perform a lot of trial-and-error to solve invocation issues.
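The event-dumping Lambda function mentioned above can be very small. This Python sketch assumes a rule with a deliberately broad pattern, here everything from a single source, which is an example choice and not a recommendation. Anything the handler prints ends up in the function's CloudWatch Logs log group.

```python
import json

# Example of a generic pattern for the capture rule (an assumption, adjust
# the source to whichever service you are trying to observe).
CAPTURE_PATTERN = {"source": ["aws.iam"]}


def lambda_handler(event, context):
    """Write the full event as one JSON line so it can be searched later."""
    line = json.dumps(event, sort_keys=True, default=str)
    print(line)  # Lambda forwards stdout to CloudWatch Logs
    return line
```

Logging each event as a single line of JSON keeps it easy to query with CloudWatch Logs Insights, and the captured events can be copied out as test input when developing a stricter pattern.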

Pro tip: One of the first event rules you should create is one that alerts you on event rule invocation failures. Without custom alerting there is no way to monitor that your event rules are working without checking CloudWatch metrics and CloudTrail logs.

Conclusion

For many years, EventBridge was another section under CloudWatch and its pattern matching engine was very basic and not that effective. Since the split, they have added many new features to the EventBridge service such as streams, archive/replay, schema registry, and pipes. In addition to those features, there were also a lot of improvements made to the pattern matching engine and the list of trigger destinations was expanded. Some recent changes have allowed me to strike off some of the issues on my list, but, as outlined above, there is still room for improvement when it comes to debugging, error handling, pattern matching, and the input transformer.

About James MacGowan

James started out as a web developer with an interest in hardware and open source software development. He made the switch to IT infrastructure and spent many years with server virtualization, networking, storage, and domain management.

After exhausting the challenges and learning opportunities provided by traditional IT infrastructure, and wanting to fully utilize his developer background, he made the switch to cloud computing.

For the last 3 years he has been dedicated to automating and providing secure cloud solutions on AWS and Azure for our clients.