AWS Networking Considerations and Recommended Practices

With AWS, networking can be hard to grasp for someone who isn’t a networking expert. Getting everything running reliably generally requires additional networking resources, which can add a hefty cost for a startup or a development environment. AWS doesn’t offer basic or development tiers for its networking resources. Instead, it provides one-size-fits-all building blocks designed to meet the scaling and redundancy requirements of large enterprises.

If you are a startup that is just starting out, your application may not have a lot of traffic. Any outages would probably go unnoticed, or be more acceptable to your initial clients. The same is true when running an internal development or staging environment. Instead of absorbing those extra costs, some choose to skip setting up their networking correctly, giving everything a public IP address and routing unencrypted traffic externally over the internet. This makes them easy targets for malicious actors and provides no redundancy if AWS has a problem. Below, I will explain how the networking works, why it is needed, and give some pointers on ways to reduce costs.

Load-Balancers

The first big expense you will run into is the load-balancer. You are probably hosting your application on a T-series EC2 instance or an ECS Fargate task. For a deployment that small, adding an application load-balancer in front of your application can roughly double your AWS infrastructure cost. This is one of the reasons why many startups run EC2 instances with public IP addresses. These instances often lack an automated patching process, and many are poorly patched. Being directly exposed to the internet makes them an easier target.

AWS Certificate Manager (ACM) provides free SSL/TLS certificates that integrate with the application load-balancer service. These free ACM certificates can’t be installed on an EC2 instance. Instead, you would have to manage your own certificates or implement a “Let’s Encrypt” solution.

While not a big expense for established companies, load-balancers add up if you set up a dedicated application load-balancer for each application in each environment. If you have multiple applications, try to re-use the same application load-balancer as much as possible. Listeners on different ports, together with listener rules that match on domain names (host headers) or URL paths, let a single load-balancer direct traffic to different applications.
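To illustrate how one shared load-balancer can serve several applications, here is a small Python model of listener rule evaluation: rules are checked in priority order, the first rule whose conditions all match wins, and the listener's default action catches everything else. This is a sketch under simplifying assumptions, not ALB's implementation. Wildcard matching is approximated with `fnmatchcase`, and the host names and target group names are hypothetical.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase
from typing import List, Optional


@dataclass
class Rule:
    """A simplified listener rule; lower priority numbers are evaluated first."""
    priority: int
    target_group: str
    host: Optional[str] = None  # host-header pattern, e.g. "api.example.com"
    path: Optional[str] = None  # path pattern, e.g. "/admin/*"

    def matches(self, host: str, path: str) -> bool:
        # A condition that is not set matches every request.
        # Host names are compared case-insensitively, paths case-sensitively.
        host_ok = self.host is None or fnmatchcase(host.lower(), self.host.lower())
        path_ok = self.path is None or fnmatchcase(path, self.path)
        return host_ok and path_ok


def route(rules: List[Rule], default: str, host: str, path: str) -> str:
    """Return the target group of the first matching rule, else the default action."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule.matches(host, path):
            return rule.target_group
    return default


# Hypothetical rules on one shared HTTPS:443 listener.
rules = [
    Rule(10, "tg-admin", host="app.example.com", path="/admin/*"),
    Rule(20, "tg-app", host="app.example.com"),
    Rule(30, "tg-api", host="api.example.com"),
]
```

With these rules, `route(rules, "tg-default", "app.example.com", "/admin/users")` returns `"tg-admin"`, while any host not listed falls through to `"tg-default"`. Ordering the more specific host-plus-path rule ahead of the host-only rule is what keeps the admin paths from being swallowed by the general application rule.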

NAT Gateways

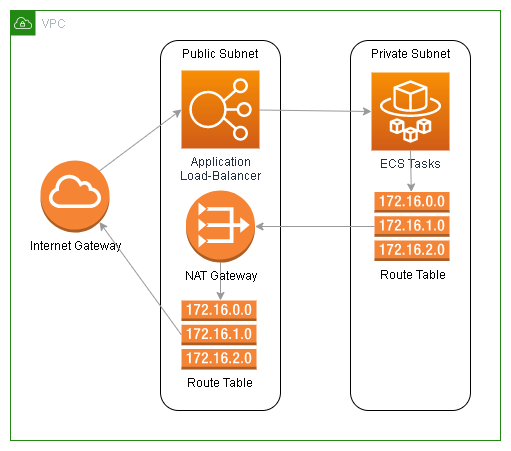

The next big expense is NAT gateways. To help protect important services, you will want to attach them to subnets that you intend to be private. Whether a subnet is public or private comes down to where its default route points. Each subnet has a route table associated with it. That route table contains a fixed local route, covering the VPC’s CIDR range, that lets anything in the VPC talk to other systems within that VPC. The CIDR range is the range of IP addresses available for assignment to network interfaces attached to your VPC.

The other routing rule usually found in a route table is the default route, which matches 0.0.0.0/0. This is a catch-all for any IP address that doesn’t match another routing rule: AWS always uses the most specific matching route, so the default route is only used when no better match exists. A public subnet has a default route that points to an internet gateway. A private subnet’s default route points to a NAT gateway, or it has no default route at all.
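The "most specific match wins" behavior is a longest-prefix match, which can be sketched in a few lines with Python's standard `ipaddress` module. The route tables below are hypothetical examples for subnets in a 10.0.0.0/16 VPC, and the gateway IDs are placeholders.

```python
import ipaddress
from typing import Dict, Optional


def lookup(route_table: Dict[str, str], destination: str) -> Optional[str]:
    """Return the target of the most specific route containing `destination`.

    Returns None when no route matches, i.e. the packet is dropped.
    """
    addr = ipaddress.ip_address(destination)
    best_net, best_target = None, None
    for cidr, target in route_table.items():
        net = ipaddress.ip_network(cidr)
        # Longest prefix wins: the /16 local route beats the /0 default route.
        if addr in net and (best_net is None or net.prefixlen > best_net.prefixlen):
            best_net, best_target = net, target
    return best_target


# Hypothetical route tables for subnets in a 10.0.0.0/16 VPC.
public_rt = {"10.0.0.0/16": "local", "0.0.0.0/0": "igw-0example"}
private_rt = {"10.0.0.0/16": "local", "0.0.0.0/0": "nat-0example"}
isolated_rt = {"10.0.0.0/16": "local"}
```

Traffic to 10.0.3.7 resolves to `"local"` in all three tables, while traffic to an internet address resolves to the internet gateway, the NAT gateway, or `None` (dropped), depending on the table.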

Route tables are used to decide where to send outbound network traffic. Technically, they have no effect on traffic coming into your subnet from outside. They do, however, affect the replies going back to an external source. This is how the default route, when it points to an internet gateway, makes the difference between public and private.

To make a subnet truly private, or isolated, you would remove the default route. Without a default route, traffic that doesn’t match any route is dropped, so there is no way for it to get to the internet. If you want to block inbound internet traffic but allow outbound traffic to reach the internet, you would point the default route at a NAT gateway. This indirectly blocks inbound access even to a resource that has a public IP address, because its replies follow the default route to the NAT gateway instead of going back out through the internet gateway, so the connection never completes. The NAT gateway itself only lets in return traffic for connections initiated from inside your VPC.

Public IP addresses are critical for allowing access from the internet to your service, but they are also critical for allowing your service to access the internet. Anything on a public subnet will require a public IP address for outbound access, as the traffic coming back needs an IP address to return to. If you deploy a resource on a public subnet without a public IP address, it is effectively isolated with no exposure to the internet. With a NAT gateway, the public IP address of the NAT gateway will represent your service on the internet. That is why your systems can work without a public IP address in a private subnet, but require one when they are in a public subnet.
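The rules from the last few paragraphs can be condensed into a small decision function. This sketch assumes IPv4 only and identifies default-route targets by their ID prefixes (`igw-` for internet gateways, `nat-` for NAT gateways); other targets, such as transit gateways, are deliberately left out.

```python
from typing import Optional


def subnet_kind(default_route_target: Optional[str]) -> str:
    """Classify a subnet by the target of its 0.0.0.0/0 route (None = no default route)."""
    if default_route_target is None:
        return "isolated"
    if default_route_target.startswith("igw-"):
        return "public"
    if default_route_target.startswith("nat-"):
        return "private"
    # Transit gateways, firewall endpoints, etc. are outside this sketch.
    raise ValueError(f"unmodeled route target: {default_route_target}")


def has_outbound_internet(default_route_target: Optional[str], has_public_ip: bool) -> bool:
    """Can a resource in this subnet open connections to the internet?"""
    kind = subnet_kind(default_route_target)
    if kind == "public":
        # The internet gateway only translates for resources that own a public IP.
        return has_public_ip
    # Behind a NAT gateway, the NAT gateway's public IP represents the resource;
    # an isolated subnet has no path out at all.
    return kind == "private"
```

The key asymmetry is visible in the `"public"` branch: the same subnet that gives an instance with a public IP full internet access leaves an instance without one cut off.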

The NAT gateway’s public IP address will represent your EC2 instances, ECS tasks, and any VPC-attached AWS service on the internet. If you want to keep something in a private subnet private, it should not have its own public IP address. NAT gateways have another benefit: they reduce the number of external IP addresses your outbound traffic comes from. This is useful when a third-party integration service requires a list of your public IP addresses to whitelist.

For redundancy and network efficiency, you would normally deploy a NAT gateway in each availability zone (AZ) you have subnets in. This can be expensive if you don’t require redundancy and don’t have a lot of traffic, as in an internal development or staging environment. To cut costs, you can run a single NAT gateway and route the default traffic from all subnets to it. This creates extra cross-AZ traffic, which AWS discourages and charges you for. The cost is minimal, though; it would take a lot of traffic before it outweighs the cost of an additional NAT gateway.
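How much traffic is "a lot" is simple arithmetic. The sketch below computes the monthly cross-AZ volume at which a second NAT gateway pays for itself. The prices you pass in are assumptions: the example values used afterwards are illustrative only, roughly in line with published us-east-1 list prices, so check the current pricing pages for your region. The optional `public_ipv4_hourly_usd` parameter accounts for the hourly charge on the second gateway's public IPv4 address.

```python
HOURS_PER_MONTH = 730  # the monthly hour count AWS pricing examples commonly use


def break_even_cross_az_gb(nat_hourly_usd: float,
                           cross_az_usd_per_gb_each_way: float,
                           public_ipv4_hourly_usd: float = 0.0) -> float:
    """GB per month of cross-AZ traffic at which a second NAT gateway pays for itself.

    Inter-AZ transfer is billed on both sides (out of one AZ and into the other),
    so every GB that crosses costs twice the per-direction rate. Both request and
    response bytes count. The NAT gateway's per-GB processing charge applies
    whichever gateway handles the traffic, so it cancels out and is ignored here.
    """
    extra_monthly = (nat_hourly_usd + public_ipv4_hourly_usd) * HOURS_PER_MONTH
    return extra_monthly / (2 * cross_az_usd_per_gb_each_way)
```

With an illustrative $0.045 per hour for the gateway and $0.01 per GB in each direction, `break_even_cross_az_gb(0.045, 0.01)` comes out at roughly 1,640 GB of cross-AZ traffic per month, far more than a typical development environment generates.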

VPC Endpoints

The third big expense is VPC endpoints. There are several reasons why you might use them.

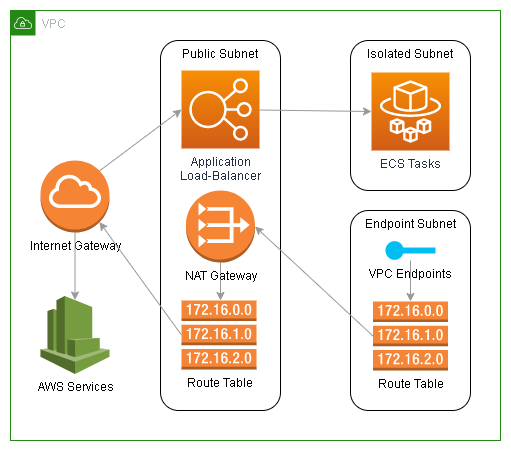

- You may want to ensure all traffic between your applications and AWS services is protected and does not leave your network.

- You may want to restrict access to your applications, while still granting access to an AWS service.

- You may have an application that you need to keep isolated (truly private) without access to the internet.

VPC endpoints can be a partial substitute for NAT gateways. If you only need a couple, they will be cheaper; if you need many, they can be more expensive. Opting for VPC endpoints instead of NAT gateways doesn’t solve your public IP address issue, though. Every system that needs to reach the internet, or an AWS service not covered by an endpoint, would still need a public IP address.
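The "a couple versus many" trade-off can be made concrete by comparing hourly charges, since interface endpoints and NAT gateways are both billed per AZ. This is a sketch that models hourly charges only; per-GB data processing charges differ between the two and are not included. The rates are parameters, and any values you plug in should come from the current pricing page.

```python
from typing import Tuple

HOURS_PER_MONTH = 730


def monthly_hourly_charges(n_endpoints: int, n_azs: int,
                           endpoint_hourly_per_az: float,
                           nat_hourly: float) -> Tuple[float, float]:
    """Return (interface endpoint cost, NAT gateway cost) per month, hourly charges only."""
    endpoints = n_endpoints * n_azs * endpoint_hourly_per_az * HOURS_PER_MONTH
    nat_gateways = n_azs * nat_hourly * HOURS_PER_MONTH
    return endpoints, nat_gateways


def max_endpoints_cheaper_than_nat(endpoint_hourly_per_az: float, nat_hourly: float) -> int:
    """Largest endpoint count whose hourly charges stay below one NAT gateway per AZ.

    Both are billed per AZ, so the number of AZs cancels out.
    """
    if endpoint_hourly_per_az <= 0:
        raise ValueError("endpoint price must be positive")
    n = 0
    while (n + 1) * endpoint_hourly_per_az < nat_hourly:
        n += 1
    return n
```

With illustrative rates of $0.01 per endpoint per AZ-hour and $0.045 per NAT gateway-hour, the crossover is 4 endpoints: a fifth endpoint makes the endpoints cost more per hour than a NAT gateway in every AZ they occupy.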

When a VPC interface endpoint is created, a network interface is also created in each subnet you assign it to. These network interfaces don’t come with a public IP address and can’t be modified. They only carry traffic from your resources to that one AWS service; they don’t give your resources, or the AWS service, a path to anything else. A workload in a subnet without internet access can therefore only reach the services you have endpoints for, and many services depend on others. Pulling an image from ECR, for example, also downloads the image layers from S3. Covering every dependency with its own endpoint adds up quickly, which is why it soon becomes a practical requirement to deploy a NAT gateway alongside your VPC interface endpoints.

An example would be an ECS task deployed in an isolated subnet, with no public IP address and no default route. It would require 2 interface endpoints for ECR (api and dkr), 2 for CloudWatch (monitoring and logs), a gateway endpoint for S3, and probably additional endpoints for SSM or Secrets Manager. If some of that traffic is not sensitive and doesn’t justify the cost of an endpoint, you could skip those endpoints by placing the task, along with the endpoints you do create, in a subnet with internet access through a NAT gateway.
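The endpoint list above can be expressed as request parameters for the EC2 `CreateVpcEndpoint` API. This sketch only builds the dictionaries; the region, VPC, subnet, route table, and security group IDs are hypothetical placeholders, and the service names follow AWS's `com.amazonaws.<region>.<service>` convention. The `skip` argument is an addition for illustration that drops the interface endpoints you decide to route through a NAT gateway instead.

```python
from typing import Dict, Iterable, List


def endpoint_requests(region: str, vpc_id: str,
                      subnet_ids: List[str], route_table_ids: List[str],
                      security_group_ids: List[str],
                      skip: Iterable[str] = ()) -> List[Dict]:
    """Build create_vpc_endpoint parameters for an ECS task in an isolated subnet.

    `skip` lists interface endpoint suffixes to leave out, e.g. when the task's
    subnet can reach those services through a NAT gateway instead.
    """
    skipped = set(skip)
    # ssm and secretsmanager are only needed if the task reads parameters or secrets.
    interface_services = ["ecr.api", "ecr.dkr", "logs", "monitoring", "ssm", "secretsmanager"]
    requests = [
        {
            "VpcEndpointType": "Interface",
            "VpcId": vpc_id,
            "ServiceName": f"com.amazonaws.{region}.{svc}",
            "SubnetIds": list(subnet_ids),
            "SecurityGroupIds": list(security_group_ids),
            "PrivateDnsEnabled": True,  # normal service host names resolve to the endpoint
        }
        for svc in interface_services
        if svc not in skipped
    ]
    # S3 uses a gateway endpoint, attached to route tables rather than subnets.
    requests.append({
        "VpcEndpointType": "Gateway",
        "VpcId": vpc_id,
        "ServiceName": f"com.amazonaws.{region}.s3",
        "RouteTableIds": list(route_table_ids),
    })
    return requests
```

Each dictionary could then be passed to a boto3 EC2 client as `ec2.create_vpc_endpoint(**params)`. Passing `skip={"ecr.api", "ecr.dkr", "monitoring"}` produces the slimmer set that remains when the task sits in a NAT gateway subnet.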

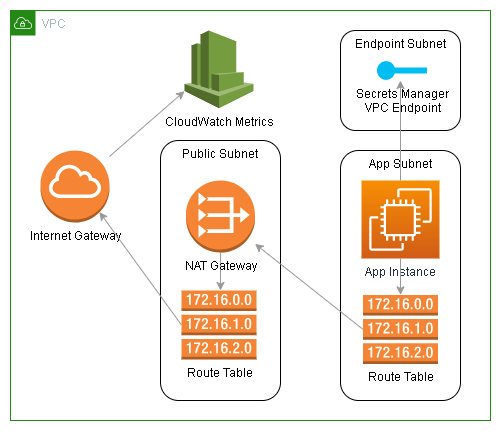

From the above list, let’s say you don’t need to secure the CloudWatch metrics and ECR endpoints, as you don’t have any sensitive data in your ECR images. If the task and the remaining VPC endpoints sit in a NAT gateway subnet, you can get away with not creating those additional endpoints, because traffic to those services goes out through the NAT gateway instead.

This doesn’t just apply to VPC endpoints. It also applies to any AWS service you attach to a VPC, such as Lambda, SageMaker, Amazon Managed Grafana, and a bunch of others I am forgetting at the moment. The network interfaces these services create in your VPC don’t have public IP addresses, so they can’t reach other AWS services without additional VPC endpoints for those services. If you attach their network interfaces to a subnet with a NAT gateway, that problem goes away.

All of the above discussion has been about interface VPC endpoints. Gateway VPC endpoints work very differently. Instead of a network interface, they use a route in your route tables, and unlike interface endpoints they have no hourly charge. S3 is a perfect example of an AWS service that uses a gateway endpoint; DynamoDB is the only other one.

You can’t add a route to a single route table and expect everything in your VPC to reach the service privately. You need to add the route to the route table of every subnet that needs private access. If you miss a route table, traffic from that subnet won’t use the endpoint: DNS still resolves the service to its public addresses, so the traffic follows the default route through a NAT gateway or internet gateway. In an isolated subnet there is no default route, so access to the service from that subnet is broken. The same happens wherever the route is missing if you lock down your S3 bucket policies to only allow requests through the endpoint. If you want to make S3 access private, all your subnets will need a route to the S3 gateway endpoint, or they may lose access to S3 entirely.
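Because a forgotten route table is easy to miss, a quick audit helps. This sketch assumes its input has the shape of the `RouteTables` list returned by EC2 `DescribeRouteTables` (for example via boto3's `describe_route_tables()`), where a gateway endpoint route shows up with `GatewayId` set to the endpoint ID. The IDs in the usage below are hypothetical.

```python
from typing import Dict, List


def route_tables_missing_endpoint(route_tables: List[Dict], vpce_id: str) -> List[str]:
    """Return the IDs of route tables with no route targeting the gateway endpoint.

    `route_tables` is assumed to look like the "RouteTables" list returned by
    EC2 DescribeRouteTables, where a gateway endpoint route appears with
    "GatewayId" set to the endpoint ID (vpce-...).
    """
    missing = []
    for table in route_tables:
        targets = {route.get("GatewayId") for route in table.get("Routes", [])}
        if vpce_id not in targets:
            missing.append(table["RouteTableId"])
    return missing


# Hypothetical data: one table already routes to the S3 endpoint, one does not.
tables = [
    {"RouteTableId": "rtb-public", "Routes": [
        {"DestinationCidrBlock": "10.0.0.0/16", "GatewayId": "local"},
        {"DestinationPrefixListId": "pl-example", "GatewayId": "vpce-0s3example"},
    ]},
    {"RouteTableId": "rtb-isolated", "Routes": [
        {"DestinationCidrBlock": "10.0.0.0/16", "GatewayId": "local"},
    ]},
]
```

Any IDs it reports could then be attached with boto3's `ec2.modify_vpc_endpoint(VpcEndpointId=vpce_id, AddRouteTableIds=missing)`. Include the VPC's main route table in the audit, since subnets without an explicit association use it.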

Circling back: if all you have are public subnets, everything you attach to the VPC will need a public IP address to reach the internet or any other AWS service. If a resource can’t be given a public IP address, it won’t function correctly. If you attach AWS services such as Lambda to your VPC, you will need to attach them to a subnet with a NAT gateway, or create VPC endpoints for every AWS service they depend on, and for every service those services depend on in turn.

Just like NAT gateways in a development or staging environment, VPC interface endpoints don’t need network interfaces in every availability zone. You can reduce costs by attaching them to a single AZ; traffic from the other AZs will route to that single network interface automatically, at the cost of some cross-AZ data charges.

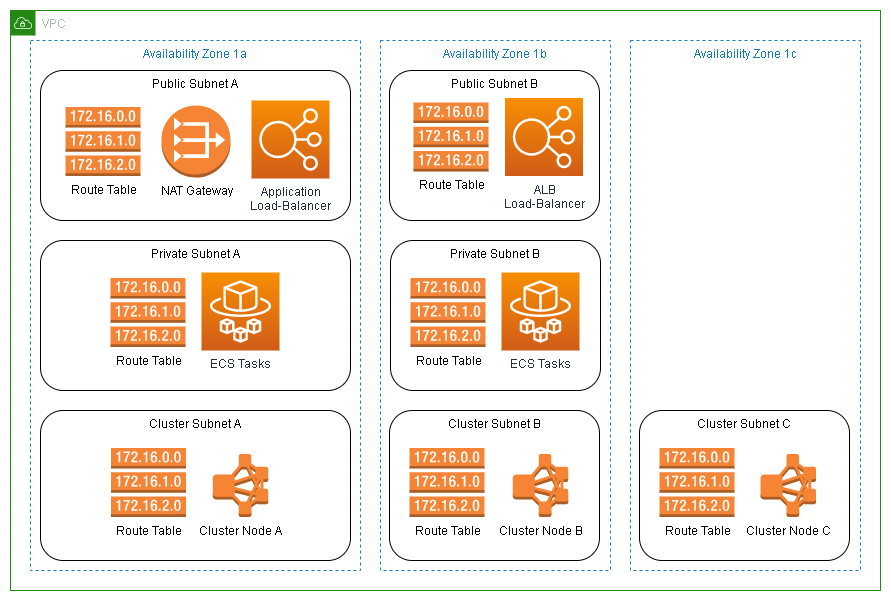

Availability Zones

The last big expense is not a direct cost, but it can amplify the costs of everything mentioned above: the number of availability zones (AZs) you choose to use. Almost all AWS regions have at least 3 AZs; there may be a couple of regions left with only 2, and the more popular regions can have up to 6. Some automated deployment scripts I have seen deploy their VPCs across every available AZ. If they also deploy NAT gateways and VPC endpoints in the recommended fashion (one per AZ), you could end up tripling your costs for no gain. In most cases, you will not need more than 2 availability zones.
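Since NAT gateways and interface endpoints are both billed per AZ, the total scales linearly with the AZ count. A minimal sketch, assuming one NAT gateway per AZ and every interface endpoint deployed in every AZ; the prices are parameters, and the figures in the usage note are illustrative rather than quoted prices.

```python
HOURS_PER_MONTH = 730


def per_az_monthly_cost(n_azs: int, nat_hourly: float,
                        n_interface_endpoints: int, endpoint_hourly_per_az: float) -> float:
    """Hourly charges for one NAT gateway per AZ plus interface endpoints in every AZ."""
    hourly_per_az = nat_hourly + n_interface_endpoints * endpoint_hourly_per_az
    return n_azs * hourly_per_az * HOURS_PER_MONTH
```

With illustrative rates of $0.045 per NAT gateway-hour and $0.01 per endpoint per AZ-hour, five endpoints cost roughly $139 per month across 2 AZs, but about $416 per month when a script spreads the same design across 6 AZs.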

Only when you need to run a 3-node cluster would you need 3 AZs for proper redundancy. Some AWS services that use proper clusters are Elasticsearch (Amazon OpenSearch Service), RDS Aurora, EKS, and EMR. Even with 3 AZs, you can prioritize the first 2 AZs and eliminate the additional redundancy costs of the third one. How would you do that? Anything you deploy that only requires 2 AZs, you would attach only to the first 2 AZs. For anything that needs all 3, you would set its default to one of the first 2 AZs.

Some of these services have a default AZ option. With EKS, you can use node affinity to prioritize your preferred AZs. You could also create a separate subnet for clusters that spans all 3 AZs while all other subnets use only 2. Clients running in the first 2 AZs that support zone-aware routing will prefer cluster nodes in their own AZ when talking to a cluster spread across all 3 AZs. This effectively reduces the traffic hitting the last AZ, so it is used mainly for redundancy.
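For EKS, a preferred (soft) node affinity on the well-known `topology.kubernetes.io/zone` node label is one way to favor the first two AZs. The sketch below builds the `affinity` block as a Python dictionary mirroring the Kubernetes pod spec; the zone names are hypothetical, and you would serialize the result into the `spec.affinity` field of your pod template.

```python
from typing import Dict, List


def preferred_zone_affinity(zones: List[str], weight: int = 100) -> Dict:
    """Build a pod spec `affinity` block that prefers, but does not require, `zones`.

    A "preferred...IgnoredDuringExecution" rule lets the scheduler fall back to
    the third AZ when nodes in the preferred zones are unavailable.
    """
    if not 1 <= weight <= 100:
        raise ValueError("Kubernetes requires a preference weight between 1 and 100")
    return {
        "nodeAffinity": {
            "preferredDuringSchedulingIgnoredDuringExecution": [
                {
                    "weight": weight,
                    "preference": {
                        "matchExpressions": [
                            {
                                "key": "topology.kubernetes.io/zone",
                                "operator": "In",
                                "values": list(zones),
                            }
                        ]
                    },
                }
            ]
        }
    }
```

Using the "preferred" form rather than `requiredDuringSchedulingIgnoredDuringExecution` is the design choice that keeps the third AZ available as a fallback instead of excluding it.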

In a cluster, the third AZ is mainly needed to determine whether the remaining node(s) have become isolated or are the survivors. The cluster would still fail if the first two AZs became unavailable. If a node can’t talk to any other node, it knows it is isolated and will stop serving traffic. If a node can talk to at least one other node, together they form a majority (a quorum) and will continue to operate. Redundant systems with only 2 nodes can never determine whether they are the isolated one, so they typically just keep serving traffic. If both nodes think they are the active node, their data can get out of sync during a network outage (a “split-brain”).
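The isolation logic above is the strict-majority quorum rule that many clustered systems use; each product has its own details, so treat this as a sketch of the general rule rather than any specific service's algorithm. The node names are hypothetical.

```python
from typing import List, Sequence


def has_quorum(reachable: int, cluster_size: int) -> bool:
    """True if a group of `reachable` nodes (itself included) is a strict majority."""
    return reachable > cluster_size // 2


def surviving_partitions(partitions: Sequence[Sequence[str]]) -> List[Sequence[str]]:
    """Return the network partitions allowed to keep serving under a majority rule."""
    size = sum(len(p) for p in partitions)
    return [p for p in partitions if has_quorum(len(p), size)]
```

Splitting a 3-node cluster as `[["az-a", "az-b"], ["az-c"]]` leaves only the two-node side serving. Splitting a 2-node cluster as `[["az-a"], ["az-b"]]` leaves no majority on either side: a system that follows the rule stops entirely, and one that ignores it risks split-brain.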

Conclusion and Recommended Practices

- Re-use your application load-balancers whenever you can.

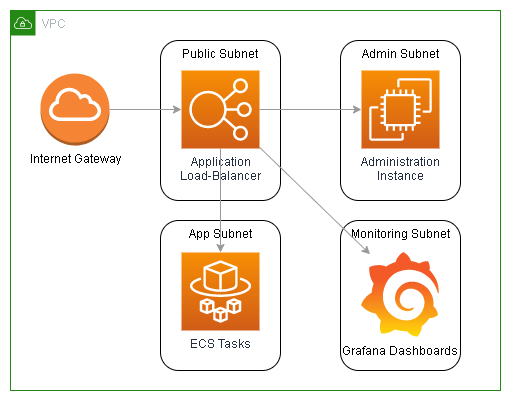

- Every VPC network design should include a public and a private subnet.

- You can get away with a single NAT gateway if you have light traffic and are willing to accept an outage to outbound traffic.

- You can get away with attaching each VPC interface endpoint to a single AZ if you have light traffic and are willing to accept an outage to that AWS service.

- Only NAT gateways, load-balancers, and services that need to be publicly accessible should be attached to public subnets. EC2 instances should sit behind a firewall or a shared load-balancer.

- Only resources in public subnets should have public IP addresses.

- VPC endpoints and other VPC-attached AWS services should only be attached to private subnets that route through a NAT gateway.

- Initially deploy your VPCs to use 2 availability zones. If you need to add a third AZ, don’t deploy a NAT gateway or VPC endpoint network interfaces for the additional AZ. If you can, prioritize traffic to the first 2 AZs.
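Several of these recommendations can be checked mechanically. This sketch encodes three of them as rules over a hypothetical, hand-written inventory; the `Resource` fields and the `kind` strings are made up for illustration and don't correspond to any AWS API, so a real check would need to map its own inventory data onto them.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Resource:
    name: str
    kind: str                      # hypothetical labels: "nat", "alb", "ec2", "lambda", "vpc-endpoint"
    subnet: str                    # "public", "private" (NAT route), or "isolated"
    public_ip: bool = False
    internet_facing: bool = False  # must this resource be reachable from the internet?


PUBLIC_SUBNET_KINDS = {"nat", "alb"}
VPC_ATTACHED_SERVICES = {"vpc-endpoint", "lambda"}


def check(resources: List[Resource]) -> List[str]:
    """Return findings for resources that break the recommendations above."""
    findings = []
    for r in resources:
        # Only resources in public subnets should have public IP addresses.
        if r.public_ip and r.subnet != "public":
            findings.append(f"{r.name}: public IP outside a public subnet")
        # Only NAT gateways, load-balancers, and public services belong in public subnets.
        if r.subnet == "public" and r.kind not in PUBLIC_SUBNET_KINDS and not r.internet_facing:
            findings.append(f"{r.name}: move to a private subnet")
        # VPC endpoints and VPC-attached services belong in private subnets with a NAT route.
        if r.kind in VPC_ATTACHED_SERVICES and r.subnet != "private":
            findings.append(f"{r.name}: attach to a private subnet with a NAT route")
    return findings
```

For example, an EC2 web server sitting in a public subnet with its own public IP is flagged for moving behind the shared load-balancer, while the NAT gateway next to it passes.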

About James MacGowan

James started out as a web developer with an interest in hardware and open-source software development. He made the switch to IT infrastructure and spent many years with server virtualization, networking, storage, and domain management.

Having exhausted the challenges and learning opportunities offered by traditional IT infrastructure, and wanting to fully use his developer background, he made the switch to cloud computing.

For the last 3 years he has been dedicated to automating and providing secure cloud solutions on AWS and Azure for our clients.