Notes on Azure Databricks Automation

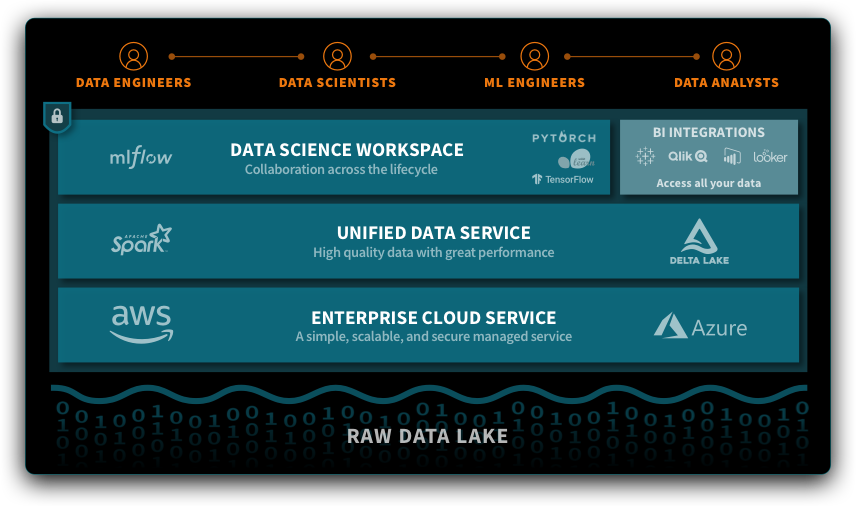

The best explanation for databricks is that it is a commercial offering of spark implementations. If you are doing anything in the big data or ML world, you are likely using (or have used) some type of processing engine for large datasets. Effectively, spark is a distributed data processing engine that is built for speed, ease of use, and flexibility.

Databricks takes spark to the next level by bundling in cluster and job automation. Bundled with connectors, custom-built libraries, and other features, databricks aims to be the “unified platform for massive scale data engineering and collaborative data science”.

Databricks itself can be run on multiple cloud platforms, however, in this post we will focus on Azure databricks. You can find out more about databricks by visiting https://databricks.com/product/unified-data-analytics-platform

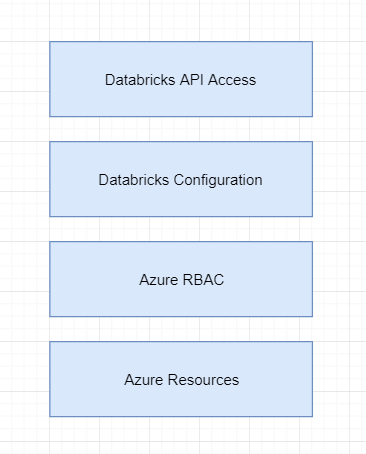

From an azure perspective, here are the following high-level building blocks for an Azure databricks deployment.

Obviously, Azure resources is going to be the first component in your stack. You will likely want to create a resource group for appropriate permission and lifecycle management. Databricks are organized by workspaces, and, luckily, they can be created via ARM template. You can find the schema reference https://docs.microsoft.com/en-us/azure/templates/microsoft.databricks/allversions

When deciding on how many databricks workspaces to create, you’ll need to consider the following:

- Databricks workspace scoped resources, such as mounts and secrets, are available to all users within a databricks workspace

- Limits on number of workbooks, cluster, and others elements that can be created

- Potential division between interactive workspaces (users sharing notebooks/code) vs job related workspaces (more for production pipelines, etc)

From a security perspective, you will likely want to target VNET Injected workspaces.

This feature allows you to deploy the clusters associated with your databricks in your own virtual network. This then allows you to make use of other azure components, such are network security groups, service endpoints, private endpoints, etc, to secure your overall big-data infrastructure.

From an Azure RBAC perspective, you’ll want to make sure only appropriate users have access to the resource. A couple of notes:

- If you want users to access the databricks workspace from the Azure portal, you will want to grant them reader access to the Azure databricks resource itself

- Anyone with a contributor role assignment on the resource will automatically be added as an admin in the databricks workspace. This obviously has implications later on, especially when you start to analyze the databricks workspace API permissions

From a security perspective, you’ll want to limit the amount of contributors on the resource itself. This is pretty typical security advice.

For the databricks API part, databricks has provided a CLI tool to assist with post workspace configuration activities. You can read more about the current state of the API here.

Personally, I’m not a fan of the toolset as it is currently built. I built my own in powershell where I could properly secure credentials, have additional convenience methods, and package functionality for downstream use. The CLI tool, at time of writing, uses personal access tokens that need to be created in the databricks workspace, and assigned to a particular user. There is a way to use AAD tokens, which is currently in some form of preview, so look for that in the future.

Once you have things going, you’ll likely want to look at clusters, instance pools, and workspace(directories) from a configuration standpoint.

The last component is the databricks API access. Databricks workspaces have their own concept of users and groups, and then you can further assign those users and groups specific permissions in the workspace itself. The API sits outside of the Azure landscape, so you will also likely want to control who has access to the API (and from where). A couple of notes:

- As mentioned above, contributor at the Azure RBAC level gives “admin” privileges in the workspace, so be careful of that

- Take a look at SCIM integration, which allows you to configure users/groups to automatically sync to desired workspaces

- Take a look for a preview of the permissions API, which will allow you to control/set permissions on workspace resources at set up time (and as part of the automation)

In conclusion, while this isn’t an exhaustive blog post, I’m hoping that you got some understanding of the steps required to automate your databricks setup. As with everything, there are architectural and security considerations that should factor in to your design.

About Shamir Charania

Shamir Charania, a seasoned cloud expert, possesses in-depth expertise in Amazon Web Services (AWS) and Microsoft Azure, complemented by his six-year tenure as a Microsoft MVP in Azure. At Keep Secure, Shamir provides strategic cloud guidance, with senior architecture-level decision-making to having the technical chops to back it all up. With a strong emphasis on cybersecurity, he develops robust global cloud strategies prioritizing data protection and resilience. Leveraging complexity theory, Shamir delivers innovative and elegant solutions to address complex requirements while driving business growth, positioning himself as a driving force in cloud transformation for organizations in the digital age.